Intro to Process Automation (Part 3) - Looking Under Camunda's Hood

The Fundamental Architecture and Why It Works Well

In the previous article, we looked at how to make a BPMN model executable and how to deploy it. Deployment in Camunda 8 is the point where a BPMN diagram stops being just a file and becomes a live process definition in the workflow engine.

In this article, we will look at what happens during a deployment, how Camunda 8 is structured internally, and why this architecture is modern and well-suited for today’s cloud-native systems.

What Happens During Deployment?

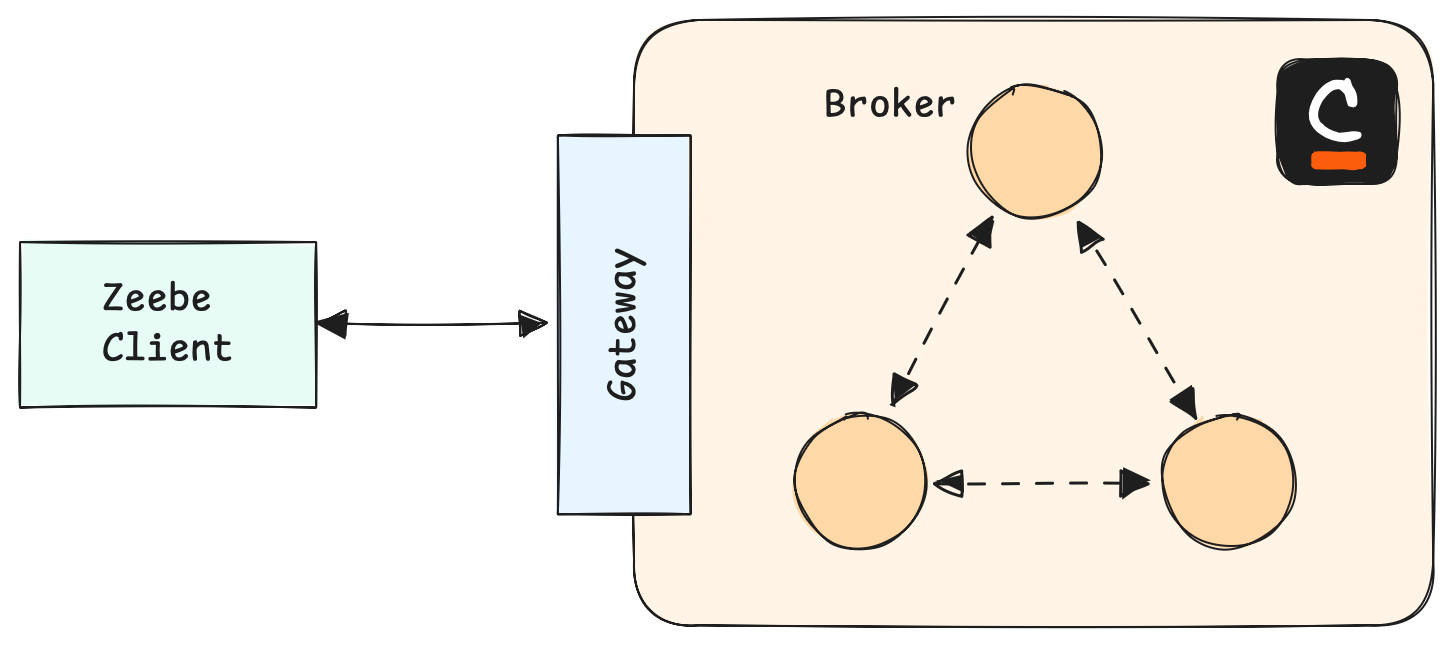

When a process model is deployed in Camunda 8, the client sends it to the Gateway, which forwards the request to the leader of partition 1 in the Zeebe cluster. This partition serves as the cluster’s deployment authority. Zeebe parses and validates the BPMN XML, assigns a unique key and version, and writes the definition to the partition’s replicated event log. This ensures the model is stored reliably and remains available even if a broker fails. Each deployment of a changed model creates a new version, allowing process definitions to evolve over time without interrupting running instances, which continue on the version they were started with.
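The deployment steps can be illustrated with a small, self-contained toy model. This is only a sketch and not Zeebe's implementation: the `DeploymentPartition` class, the record fields, and the `make_xml` helper are hypothetical names chosen for the example, and validation is reduced to checking that the XML parses and contains a process with an id.

```python
from dataclasses import dataclass, field
from itertools import count
import xml.etree.ElementTree as ET

BPMN_NS = "http://www.omg.org/spec/BPMN/20100524/MODEL"


@dataclass
class DeploymentPartition:
    """Toy stand-in for the partition that accepts deployments (hypothetical)."""
    log: list = field(default_factory=list)               # append-only record log
    latest_version: dict = field(default_factory=dict)    # process id -> latest version
    _keys: count = field(default_factory=lambda: count(1))

    def deploy(self, bpmn_xml: str) -> dict:
        # Parsing doubles as minimal validation: malformed XML raises ParseError.
        root = ET.fromstring(bpmn_xml)
        process = root.find(f"{{{BPMN_NS}}}process")
        if process is None or not process.get("id"):
            raise ValueError("no <process> element with an id found")
        process_id = process.get("id")
        # Assign the next version for this process id and a unique key.
        version = self.latest_version.get(process_id, 0) + 1
        record = {"key": next(self._keys), "processId": process_id,
                  "version": version, "xml": bpmn_xml}
        # Append to the log first, then update the lookup table.
        self.log.append(record)
        self.latest_version[process_id] = version
        return record


def make_xml(name: str) -> str:
    """Build a minimal BPMN document for the example (hypothetical helper)."""
    return (f'<definitions xmlns="{BPMN_NS}">'
            f'<process id="vacation-request" name="{name}" isExecutable="true"/>'
            '</definitions>')


partition = DeploymentPartition()
first = partition.deploy(make_xml("Vacation Request"))
second = partition.deploy(make_xml("Vacation Request (revised)"))
print(first["version"], second["version"])
```

Both deployments stay in the log, so an instance started on the first version could keep using it while new instances pick up the second.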

After the deployment is written to the log, the leader of partition 1 distributes the process definition to all other partitions. Each partition records the definition in its own log and state, so it can start new process instances locally without retrieving the XML from the deployment partition each time. This distribution ensures that every partition in the cluster can execute the latest process versions, while partition 1 remains the single place where deployments are accepted and ordered.
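A minimal sketch of this distribution step might look as follows. The `Partition` class and the `distribute` function are hypothetical, and the real engine handles retries and acknowledgements over the network, which this toy version replaces with a direct method call.

```python
class Partition:
    """Toy partition that keeps the definitions it has received (hypothetical)."""

    def __init__(self, partition_id):
        self.partition_id = partition_id
        self.definitions = {}  # (process id, version) -> BPMN XML

    def receive(self, process_id, version, xml):
        self.definitions[(process_id, version)] = xml
        return True  # acknowledgement back to the sender


def distribute(partitions, process_id, version, xml):
    """Store on the first (deployment) partition, then push to all others.

    Returns the ids of partitions that have not acknowledged yet.
    """
    deployment_partition, *others = partitions
    deployment_partition.receive(process_id, version, xml)
    pending = {p.partition_id for p in others}
    for p in others:
        if p.receive(process_id, version, xml):
            pending.discard(p.partition_id)
    return pending


partitions = [Partition(i) for i in (1, 2, 3)]
pending = distribute(partitions, "vacation-request", 1, "<definitions/>")
print(pending)  # an empty set means every partition acknowledged
```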

This design keeps deployments fast, consistent, and fault-tolerant. By accepting deployments in one place while sharing the resulting definitions across the cluster, Camunda 8 combines strong consistency with scalability. Every partition in the cluster can create process instances based on the latest deployed models, and if leadership changes within a partition, the new leader already has the definitions it needs to continue processing without disruption.

The Core of Camunda 8’s Architecture

At the center of Camunda 8 is Zeebe, a workflow engine that was built as a distributed system. Instead of relying on a single relational database, Zeebe uses an event log to persist every state change in a process. Each event, such as the creation of a task, an update of a variable, or the completion of an activity, is appended to the log. The current state of all workflows is derived by applying these events to an internal state store. Because Zeebe also takes periodic snapshots of that state, recovery after a failure does not require reading the whole log: a broker restores the latest snapshot and replays only the events recorded after it, which brings it back to its exact previous state.
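The idea of deriving state from a log can be shown with a short event-sourcing sketch. The event types and the shape of the state are invented for this example and do not match Zeebe's record format. The `snapshot` parameter illustrates how a replay can start from a saved state instead of the very first event.

```python
import copy


def apply(state, event):
    """Apply one event to the state dict in place and return it."""
    kind = event["type"]
    if kind == "TASK_CREATED":
        state["open_tasks"].add(event["task"])
    elif kind == "TASK_COMPLETED":
        state["open_tasks"].discard(event["task"])
    elif kind == "VARIABLE_UPDATED":
        state["variables"][event["name"]] = event["value"]
    return state


def replay(events, snapshot=None, from_position=0):
    """Rebuild state from the log, optionally starting from a snapshot."""
    if snapshot is None:
        state = {"open_tasks": set(), "variables": {}}
    else:
        state = copy.deepcopy(snapshot)  # never mutate the stored snapshot
    for event in events[from_position:]:
        apply(state, event)
    return state


log = [
    {"type": "TASK_CREATED", "task": "approve-request"},
    {"type": "VARIABLE_UPDATED", "name": "days", "value": 5},
    {"type": "TASK_COMPLETED", "task": "approve-request"},
    {"type": "TASK_CREATED", "task": "notify-employee"},
]

full = replay(log)                                  # replay everything
snapshot = replay(log[:2])                          # state after two events
fast = replay(log, snapshot=snapshot, from_position=2)
print(full == fast)
```

Both paths end in the same state, which is the property that makes a snapshot plus a partial replay a safe shortcut.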

Zeebe organizes execution across multiple brokers and partitions. A broker is a node in the cluster that manages workflow execution. The workload is divided into partitions, and each partition can host many process instances. Partitions are replicated across multiple brokers for fault tolerance. One broker acts as the leader for a partition and processes commands, while followers replicate the data and are ready to take over if the leader fails. This replication strategy ensures that committed workflow data survives even if a node in the cluster goes offline.
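The relationship between brokers, partitions, and leaders can be sketched with two small functions. The round-robin placement and the "first available replica becomes leader" rule are simplifying assumptions for illustration; an actual cluster elects leaders through a consensus protocol, and the function names here are hypothetical.

```python
def replica_layout(partition_count, broker_count, replication_factor):
    """Place each partition's replicas on consecutive brokers, round-robin."""
    layout = {}
    for p in range(1, partition_count + 1):
        layout[p] = [(p - 1 + i) % broker_count for i in range(replication_factor)]
    return layout


def elect_leaders(layout, offline=frozenset()):
    """Pick the first replica that is still online as leader (toy rule)."""
    leaders = {}
    for p, replicas in layout.items():
        alive = [b for b in replicas if b not in offline]
        leaders[p] = alive[0] if alive else None
    return leaders


layout = replica_layout(partition_count=3, broker_count=3, replication_factor=3)
healthy = elect_leaders(layout)
after_failure = elect_leaders(layout, offline={0})
print(healthy)
print(after_failure)
```

With broker 0 offline, partition 1 moves to a follower that already holds a replica, so no partition is left without a leader.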

Scalability is achieved by adding more partitions and brokers to the cluster. This allows Zeebe to distribute workloads evenly and handle very large numbers of process instances in parallel. The system does not need to grow vertically by increasing the capacity of a single machine. Instead, it grows horizontally, which is a key characteristic of cloud-native architectures.
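A rough way to picture horizontal scaling is to spread new process instances over the available partitions and look at the load on each one. The round-robin assignment below is an assumption made for this sketch, and `assign_instances` is a hypothetical helper.

```python
from collections import Counter
from itertools import cycle


def assign_instances(instance_count, partition_count):
    """Assign new process instances to partitions in round-robin order."""
    partitions = cycle(range(1, partition_count + 1))
    return Counter(next(partitions) for _ in range(instance_count))


three = assign_instances(12, 3)
four = assign_instances(12, 4)
print(dict(three))
print(dict(four))
```

Adding a fourth partition lowers the number of instances each partition has to process, without making any single machine larger.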

Zeebe itself is focused purely on orchestration. It creates jobs for service tasks and waits for external workers to claim and complete them. Workers connect through gRPC, subscribe to a job type, and activate jobs when they are available. Once the work is done, the worker sends a completion command back to Zeebe, which moves the process forward. Human tasks follow the same pattern: the engine creates the task and waits, and Tasklist provides a user interface where people can complete it.
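The job pattern can be simulated in memory without a running cluster. The sketch below is not the Zeebe client API: `ToyEngine`, `run_worker`, and the job type names are hypothetical, and the network layer is replaced by plain method calls.

```python
from collections import deque


class ToyEngine:
    """In-memory stand-in for the engine's job handling (hypothetical)."""

    def __init__(self):
        self.jobs = deque()
        self.completed = []
        self._next_key = 1

    def create_job(self, job_type, variables):
        job = {"key": self._next_key, "type": job_type, "variables": variables}
        self._next_key += 1
        self.jobs.append(job)
        return job["key"]

    def activate_jobs(self, job_type, max_jobs):
        """Hand out up to max_jobs jobs of the requested type."""
        activated, remaining = [], deque()
        while self.jobs:
            job = self.jobs.popleft()
            if job["type"] == job_type and len(activated) < max_jobs:
                activated.append(job)
            else:
                remaining.append(job)
        self.jobs = remaining
        return activated

    def complete_job(self, key, variables):
        self.completed.append((key, variables))


def run_worker(engine, job_type, handler, max_jobs=10):
    """Activate jobs of one type, run the handler, and report completion."""
    handled = 0
    for job in engine.activate_jobs(job_type, max_jobs):
        result = handler(job["variables"])
        engine.complete_job(job["key"], result)
        handled += 1
    return handled


engine = ToyEngine()
engine.create_job("send-approval-email", {"employee": "Ada"})
engine.create_job("book-calendar", {"employee": "Ada"})
handled = run_worker(engine, "send-approval-email", lambda v: {"emailSent": True})
print(handled, engine.completed)
```

The engine never runs the business logic itself. It only tracks which jobs exist and which have been completed, which is the separation of orchestration from execution that the paragraph describes.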

This design is inherently cloud-native. Zeebe is deployed as a set of containerized services, often orchestrated by Kubernetes. Brokers and gateways can be scaled independently, exporters run inside the brokers and scale with them, and replication ensures resilience against failures. By separating orchestration from execution, Camunda 8 avoids the limitations of monolithic systems and provides a flexible foundation for automation in modern distributed environments.

Surrounding Components

Camunda 8 is built as a modular platform around Zeebe. Operate provides a UI to inspect process instances, track progress, and resolve incidents. Tasklist is used for human tasks, allowing users to complete work assigned to them. Optimize offers analytics and reporting to identify trends and bottlenecks. These applications interact with Zeebe through exporters, which stream workflow data into dedicated storage that these tools can query efficiently.
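How an exporter turns the log into something queryable can be sketched as follows. The record fields (`instanceKey`, `intent`) and the `ToyExporter` class are invented for this example, and a plain dictionary stands in for the dedicated storage.

```python
class ToyExporter:
    """Streams log records into a queryable index (hypothetical sketch)."""

    def __init__(self):
        self.index = {}          # process instance key -> latest known status
        self.last_position = -1  # position of the last exported record

    def export(self, log):
        """Export only the records that have not been exported yet."""
        for position in range(self.last_position + 1, len(log)):
            record = log[position]
            self.index[record["instanceKey"]] = record["intent"]
            self.last_position = position
        return self.last_position


records = [
    {"instanceKey": 100, "intent": "ACTIVATED"},
    {"instanceKey": 101, "intent": "ACTIVATED"},
]
exporter = ToyExporter()
exporter.export(records)

# New records arrive later; the exporter resumes where it stopped.
records.append({"instanceKey": 100, "intent": "COMPLETED"})
position = exporter.export(records)
print(position, exporter.index)
```

Tracking the last exported position lets the exporter resume after a restart without re-reading records it has already written, and tools such as Operate can query the index without putting load on the engine.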

For the vacation request process, Operate can be used to see the status of an individual request, Tasklist allows managers to approve or reject requests, and Optimize provides long-term statistics on approval times or rejection rates. Each component focuses on a specific use case, while Zeebe remains responsible for reliable orchestration in the background.

Why This Architecture Is Modern

Camunda 8 was designed for cloud-native environments from the start. Instead of embedding a workflow engine into a single application, Zeebe runs as a distributed service that can scale horizontally across clusters. It does not rely on a relational database but persists state in an event log, which enables strong consistency, replayability, and resilience in the face of failure.

This architecture aligns with how modern systems are built. It integrates through gRPC and REST APIs, works well in microservice landscapes, and can run on Kubernetes as a first-class citizen. By externalizing workers, Camunda avoids the pitfalls of monolithic BPM systems and instead embraces an orchestration model that coordinates distributed services, cloud APIs, and human interactions. This makes it particularly suited for organizations that want automation to grow alongside their digital platforms without being locked into rigid legacy tooling.

Looking Ahead

We now understand what happens during deployment in Camunda 8 and why its architecture is a strong fit for modern process automation. The next step is to look at how processes interact with external systems. In the following article, we will explore connectors and workers, comparing the built-in integration capabilities with the flexibility of custom implementations.